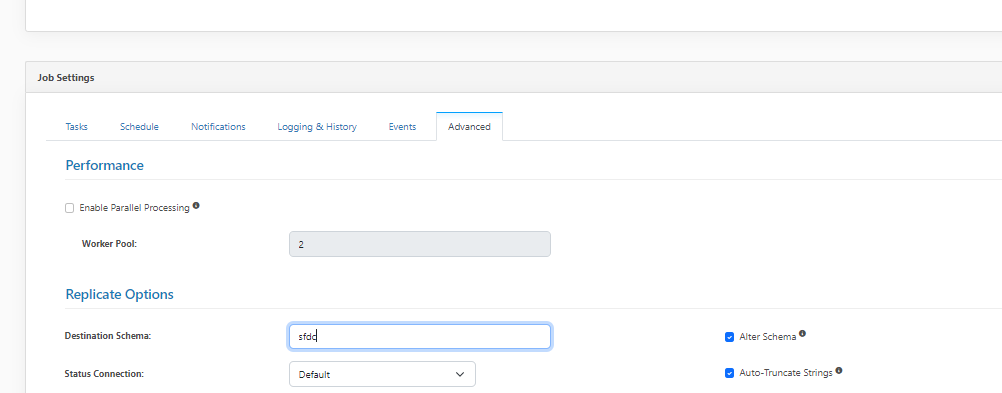

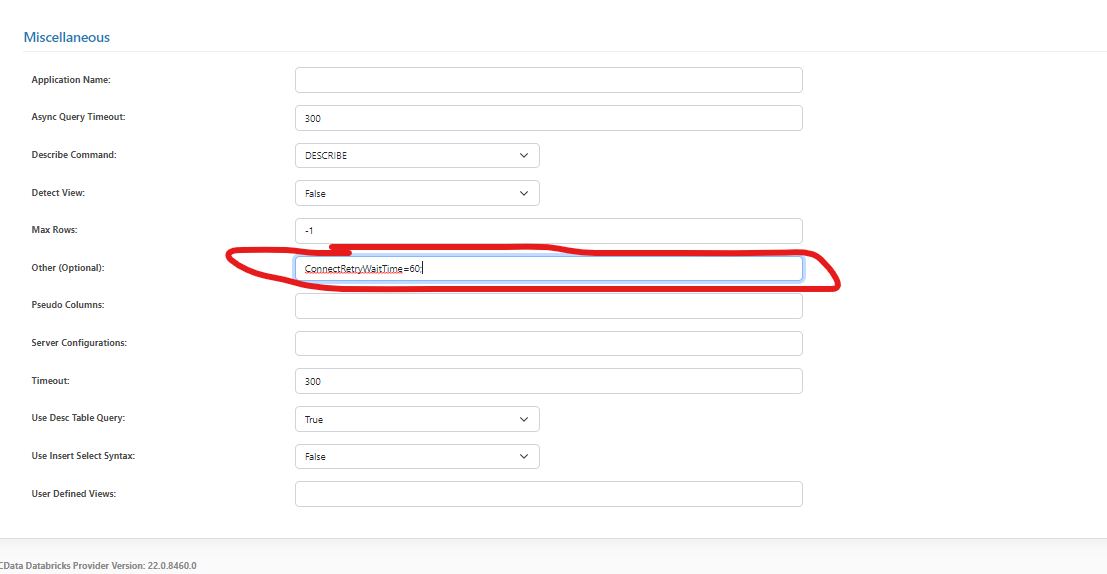

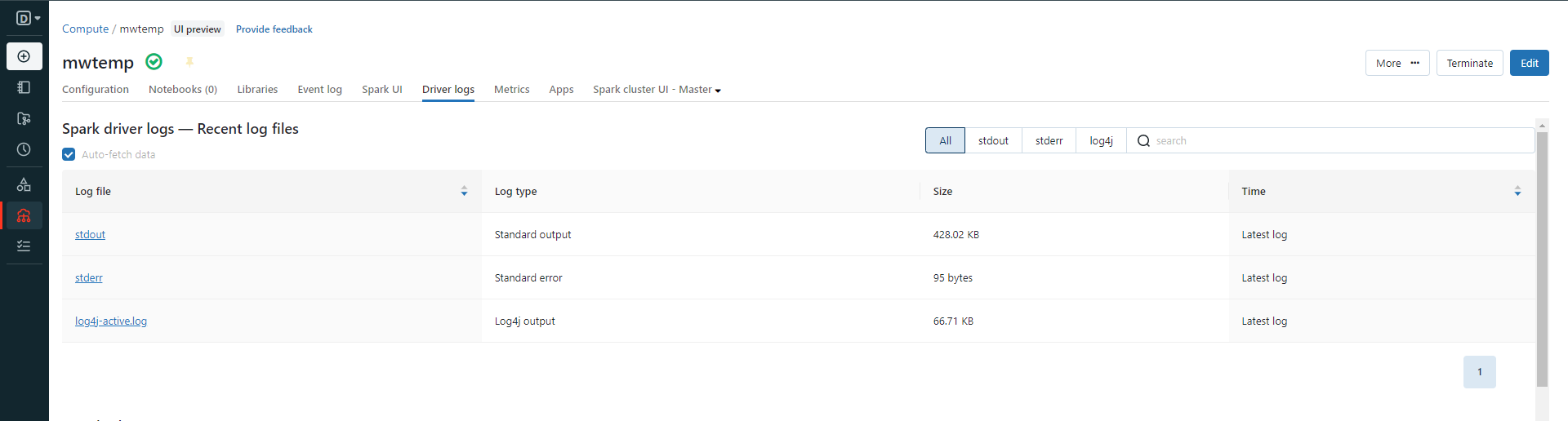

I’m getting an error where Sync times out while Databricks clusters are starting up. I’ve tried modifying the job timeout and the connection timeout, but neither has solved it.

I’m also getting “Failed to check the directory status” 500 errors. What’s going on?