Hi @quangdutran809

While it is true that the default value of EnableMetadataAPI is True for the driver, that does not necessarily mean the driver will use it automatically. From my understanding, you can only use it if it is enabled for your account (it might require an Enterprise subscription). If you are authenticating with a PersonalAccessToken, you will also need to make sure the token has the `schema.bases:read` scope. Can you confirm this?

Also, did you try specifying the BaseId and the name of the table in question, just in case?
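For reference, here is a minimal Java sketch that sets these properties explicitly in the connection string. The token and base ID values are placeholders. The table-name setting is left out because its exact property name should be checked in the driver documentation. It assumes the CData Airtable JDBC JAR is on the classpath so that `DriverManager` can locate the driver.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.LinkedHashMap;
import java.util.Map;

public class AirtableUrlBuilder {

    /** Joins properties into "jdbc:airtable:Key1=Value1;Key2=Value2;" in insertion order. */
    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder("jdbc:airtable:");
        for (Map.Entry<String, String> e : props.entrySet()) {
            // Values are not quoted or escaped here, so they must not contain ';'
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) throws SQLException {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("PersonalAccessToken", "xxxx");     // placeholder; token needs the schema.bases:read scope
        props.put("BaseId", "appXXXXXXXXXXXXXX");      // placeholder base ID
        props.put("EnableMetadataAPI", "True");        // already the default, set only to be explicit
        String url = build(props);
        // Requires the CData Airtable JDBC driver on the classpath
        try (Connection conn = DriverManager.getConnection(url)) {
            System.out.println("Driver: " + conn.getMetaData().getDriverName());
        }
    }
}
```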

https://cdn.cdata.com/help/JAJ/jdbc/RSBAirtable_p_EnableMetadataAPI.htm

However, if using the Metadata API is not an option, I think you can first disable EnableMetadataAPI in the driver and then consider the other options mentioned earlier.
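If you want the application to try the Metadata API first and only turn it off when that fails, a sketch like the following could work. It assumes a failure due to the missing account feature or token scope surfaces as an `SQLException`, either when connecting or when listing tables. That is why the opener also touches the table list. The `Opener` interface and the class name are hypothetical helpers, and the base URL should not already contain `EnableMetadataAPI`.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;

public class MetadataFallback {

    /** Opens something from a JDBC URL; DriverManager::getConnection has this shape. */
    @FunctionalInterface
    interface Opener<T> {
        T open(String url) throws SQLException;
    }

    /**
     * Tries EnableMetadataAPI=True first and, if that throws, retries once with
     * EnableMetadataAPI=False. The first failure is attached as a suppressed exception.
     */
    static <T> T openWithFallback(String baseUrl, Opener<T> opener) throws SQLException {
        String prefix = baseUrl.endsWith(";") ? baseUrl : baseUrl + ";";
        try {
            return opener.open(prefix + "EnableMetadataAPI=True;");
        } catch (SQLException metadataFailure) {
            try {
                return opener.open(prefix + "EnableMetadataAPI=False;");
            } catch (SQLException fallbackFailure) {
                fallbackFailure.addSuppressed(metadataFailure);
                throw fallbackFailure;
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        // Connect, then read the table list so metadata problems surface inside the opener
        Opener<Connection> connectAndProbe = url -> {
            Connection c = DriverManager.getConnection(url);
            try (ResultSet tables = c.getMetaData().getTables(null, null, "%", null)) {
                tables.next();
            } catch (SQLException e) {
                c.close();
                throw e;
            }
            return c;
        };
        // Placeholder token; requires the CData Airtable JDBC driver on the classpath
        try (Connection conn = openWithFallback("jdbc:airtable:PersonalAccessToken=xxxx;", connectAndProbe)) {
            System.out.println("Connected");
        }
    }
}
```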

Regarding RowScan, did you actually try adjusting the RowScanDepth value? If so, what happens?
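One way to check whether a larger RowScanDepth changes the detected types is to compare the column types that the driver reports through the standard JDBC `DatabaseMetaData.getColumns` call at two depths. This is a sketch. The depths 100 and 1000 are arbitrary. The base connection string (with EnableMetadataAPI disabled) and the table name are taken from the command line. The code assumes the CData driver is on the classpath and that the base string does not already set RowScanDepth.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.LinkedHashMap;
import java.util.Map;

public class RowScanDepthCheck {

    /** Appends RowScanDepth=N to a connection string, adding a ';' separator if needed. */
    static String withRowScanDepth(String url, int depth) {
        String prefix = url.endsWith(";") ? url : url + ";";
        return prefix + "RowScanDepth=" + depth + ";";
    }

    /** Column name -> type name, as reported by the driver's DatabaseMetaData. */
    static Map<String, String> columnTypes(Connection conn, String table) throws SQLException {
        Map<String, String> types = new LinkedHashMap<>();
        DatabaseMetaData md = conn.getMetaData();
        try (ResultSet rs = md.getColumns(null, null, table, "%")) {
            while (rs.next()) {
                types.put(rs.getString("COLUMN_NAME"), rs.getString("TYPE_NAME"));
            }
        }
        return types;
    }

    public static void main(String[] args) throws SQLException {
        // args[0]: base connection string, args[1]: table name (both supplied by you)
        for (int depth : new int[] {100, 1000}) {
            try (Connection conn = DriverManager.getConnection(withRowScanDepth(args[0], depth))) {
                System.out.println("RowScanDepth=" + depth + " -> " + columnTypes(conn, args[1]));
            }
        }
    }
}
```

If both runs print the same types, the column probably contains values that still look like text within the scanned rows. In that case, the schema-file approach below is the more direct fix.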

As for the last option, it simply requires adding the following to the connection string:

`jdbc:airtable:PersonalAccessToken=xxxx;......;Other=GenerateSchemaFiles=OnStart;`

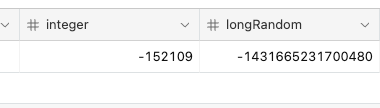
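To trigger the generation from code instead of a GUI tool, a minimal sketch could open a connection with the setting above, list the tables (standing in for "trying to load the tables"), and then print the `.rsd` files found in `%APPDATA%\CData\Airtable Data Provider\Schema`. The token is a placeholder, and the remaining properties from your own connection string would need to be added. The sketch assumes the CData driver JAR is on the classpath and that it runs on Windows, where `APPDATA` is set. The class and helper names are illustrative.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class GenerateSchemaFiles {

    /** %APPDATA%\CData\Airtable Data Provider\Schema, the folder mentioned in this answer. */
    static Path defaultSchemaDir() {
        String appData = System.getenv("APPDATA");
        if (appData == null) {
            throw new IllegalStateException("APPDATA is not set; pass the schema folder explicitly");
        }
        return Paths.get(appData, "CData", "Airtable Data Provider", "Schema");
    }

    /** Sorted file names of the *.rsd files in a folder. */
    static List<String> listRsdFiles(Path dir) throws IOException {
        List<String> names = new ArrayList<>();
        try (DirectoryStream<Path> files = Files.newDirectoryStream(dir, "*.rsd")) {
            for (Path f : files) {
                names.add(f.getFileName().toString());
            }
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws SQLException, IOException {
        // Add the rest of your own connection properties before the Other= setting
        String url = "jdbc:airtable:PersonalAccessToken=xxxx;Other=GenerateSchemaFiles=OnStart;";
        try (Connection conn = DriverManager.getConnection(url);
             ResultSet tables = conn.getMetaData().getTables(null, null, "%", null)) {
            while (tables.next()) {
                System.out.println("Table: " + tables.getString("TABLE_NAME"));
            }
        }
        System.out.println("Schema files: " + listRsdFiles(defaultSchemaDir()));
    }
}
```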

After testing the connection and trying to load the tables, you will notice that schema files have been generated for all available tables in the following location: `%APPDATA%\CData\Airtable Data Provider\Schema`. Navigate to this folder and open the `TableName.rsd` file in a text editor. There you can change a column's type, for example to an integer. Save the changes and try loading the table again.
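If several columns or tables need the same fix, the edit can also be scripted. The sketch below assumes, without having verified this against the driver docs, that each column in the `.rsd` file is an `<attr>` tag with `name="…"` and `xs:type="…"` attributes. Valid type names such as `int` should be checked in the driver documentation. The class name and the `.bak` backup are illustrative choices. It uses regular expressions instead of an XML parser so that the rest of the file is not reformatted.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class RsdTypeEditor {

    // Assumed layout: each column is an <attr ...> tag carrying name="..." and xs:type="..."
    private static final Pattern ATTR_TAG = Pattern.compile("<attr\\b[^>]*>");
    private static final Pattern TYPE_ATTR = Pattern.compile("\\bxs:type=\"[^\"]*\"");

    /** Returns the RSD text with the xs:type of the named column set to newType. */
    static String setColumnType(String rsd, String column, String newType) {
        Pattern nameAttr = Pattern.compile("\\bname=\"" + Pattern.quote(column) + "\"");
        Matcher tags = ATTR_TAG.matcher(rsd);
        StringBuffer out = new StringBuffer();
        while (tags.find()) {
            String tag = tags.group();
            if (nameAttr.matcher(tag).find()) {
                // Replace the type attribute only inside the tag for this column
                tag = TYPE_ATTR.matcher(tag)
                        .replaceFirst(Matcher.quoteReplacement("xs:type=\"" + newType + "\""));
            }
            tags.appendReplacement(out, Matcher.quoteReplacement(tag));
        }
        tags.appendTail(out);
        return out.toString();
    }

    /** Copies the file to <name>.bak, then rewrites it with the new column type. */
    static void editFile(Path rsdFile, String column, String newType) throws IOException {
        Path backup = rsdFile.resolveSibling(rsdFile.getFileName() + ".bak");
        Files.copy(rsdFile, backup, StandardCopyOption.REPLACE_EXISTING);
        String text = new String(Files.readAllBytes(rsdFile), StandardCharsets.UTF_8);
        Files.write(rsdFile, setColumnType(text, column, newType).getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical usage: java RsdTypeEditor "<schema folder>\TableName.rsd" Amount int
        editFile(Paths.get(args[0]), args[1], args[2]);
    }
}
```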

Let me know if this works.