Apache Kafka is an open-source stream processing platform that is primarily used for building real-time data pipelines and event-driven applications. When paired with the CData JDBC Drivers, Kafka can work with live data from the data source each driver targets. Several errors can occur while configuring a data source for Apache Kafka. Some of these errors, and how to resolve them, are described below:

-

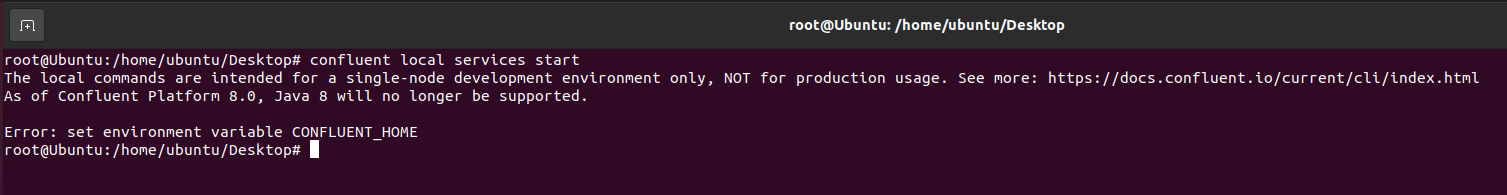

Error: set environment variable CONFLUENT_HOME

When using the following command:

confluent local services start

We get the following error message:

The local commands are intended for a single-node development environment only, NOT for production usage. See more: https://docs.confluent.io/current/cli/index.html

As of Confluent Platform 8.0, Java 8 will no longer be supported.

Error: set environment variable CONFLUENT_HOME

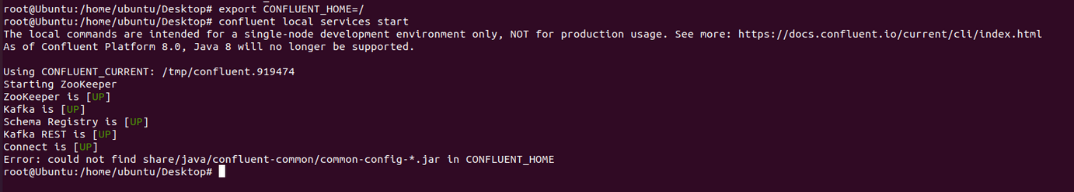

To solve this issue, we need to set the CONFLUENT_HOME variable. In this setup it should be set to / (a forward slash, meaning the root directory), since the command resolves /etc/kafka/zookeeper.properties relative to it.

To do so, we need to use the following command:

export CONFLUENT_HOME=/
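Before starting the services again, it can help to confirm that the chosen directory really contains the file the command looks for. The following is a minimal sketch, assuming the etc/kafka/zookeeper.properties layout mentioned above; the function name check_confluent_home is hypothetical and not part of the Confluent CLI:

```shell
#!/usr/bin/env bash
# check_confluent_home DIR
# Succeeds when DIR contains etc/kafka/zookeeper.properties, the file the
# local services command is assumed to read (based on the path quoted above).
check_confluent_home() {
  local home="${1%/}"   # drop one trailing slash, so "/" becomes ""
  if [ -f "$home/etc/kafka/zookeeper.properties" ]; then
    echo "OK: $home/etc/kafka/zookeeper.properties found"
  else
    echo "Missing: $home/etc/kafka/zookeeper.properties" >&2
    return 1
  fi
}
```

After the export, calling check_confluent_home "$CONFLUENT_HOME" reports whether the properties file is where the CLI expects it. If it is missing, CONFLUENT_HOME probably needs to point at a different installation directory.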

-

Error: could not find share/java/confluent-common/common-config-*.jar in CONFLUENT_HOME

If you stumble upon this particular error message:

The local commands are intended for a single-node development environment only, NOT for production usage. See more: https://docs.confluent.io/current/cli/index.html

As of Confluent Platform 8.0, Java 8 will no longer be supported.

Using CONFLUENT_CURRENT: /tmp/confluent.919474

Starting ZooKeeper

ZooKeeper is [UP]

Kafka is [UP]

Schema Registry is [UP]

Kafka REST is [UP]

Connect is [UP]

Error: could not find share/java/confluent-common/common-config-*.jar in CONFLUENT_HOME

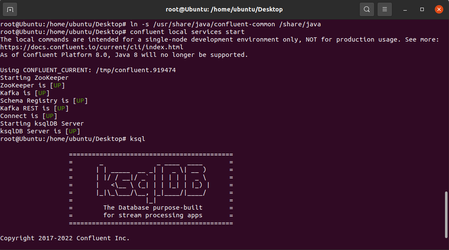

This means that the ksqlDB Server cannot locate its dependencies. The confluent-common directory is stored in one path, while the ksqlDB server looks for it in another. To solve this issue, we need to create a symbolic link (symlink). A symlink is similar to a shortcut in Windows: it makes one path point to another path on your machine. Since our files are located in /usr/share/java/confluent-common and the server looks under /share/java, we need to use the following command:

ln -s /usr/share/java/confluent-common /share/java
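Note that ln -s behaves differently depending on whether its last argument already exists as a directory: if it does, the link is created inside it; if it does not, the last argument itself becomes the link. The sketch below makes the intended result explicit by always creating a confluent-common link inside the parent directory. It assumes CONFLUENT_HOME is / as set earlier, so the file is searched for under /share/java/confluent-common; the function name link_confluent_common is hypothetical:

```shell
#!/usr/bin/env bash
# link_confluent_common SOURCE_DIR PARENT_DIR
# Creates PARENT_DIR/confluent-common as a symlink to SOURCE_DIR.
link_confluent_common() {
  local src="$1" parent="$2"
  [ -d "$src" ] || { echo "Source not found: $src" >&2; return 1; }
  # Make sure the parent exists so the link lands inside it.
  mkdir -p "$parent" || return 1
  # -f replaces an existing link; -n stops ln from following an existing
  # symlink-to-directory and creating a nested link inside it.
  ln -sfn "$src" "$parent/confluent-common"
}
```

For the paths in this article, the call would be link_confluent_common /usr/share/java/confluent-common /share/java, which typically requires root privileges. Running it twice is safe, since the existing link is replaced rather than nested.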

-

Make sure the services are UP and running

After running the confluent local services start command, use the confluent local services status command to confirm that every service is actually up and running.

This was the case with the ZooKeeper service. At first, it looked as if the service was running, but the status check showed that it was DOWN. If this happens, you can either check whether the port the service needs is already in use and stop the process occupying it, or change the port the service uses.
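Both checks can be scripted. The sketch below is illustrative only: it assumes the status output uses lines of the form "ZooKeeper is [UP]" or "ZooKeeper is [DOWN]", as in the messages shown earlier, and the function names down_services and port_in_use are hypothetical. The port check relies on bash's /dev/tcp redirection, so it needs bash rather than plain sh:

```shell
#!/usr/bin/env bash
# down_services: reads service status lines on stdin and prints the name of
# every service whose status is anything other than [UP].
down_services() {
  grep ' is \[' | grep -v ' is \[UP\]' | sed 's/ is \[.*$//'
}

# port_in_use PORT [HOST]
# Succeeds when something accepts TCP connections on HOST:PORT
# (HOST defaults to 127.0.0.1). The connection is opened in a subshell
# and closed again as soon as the subshell exits.
port_in_use() {
  local port="$1" host="${2:-127.0.0.1}"
  (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null
}
```

For example, piping confluent local services status into down_services lists the services that need attention, and port_in_use 2181 checks ZooKeeper's default client port. If the port is taken, a tool such as lsof -i :2181 can show which process holds it.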

-

The io.confluent.connect.jdbc.JdbcSourceConnector class is not found

In the Define a New JDBC Connection to Amazon Athena data section of our documentation (Stream Amazon Athena Data into Apache Kafka Topics), the 8th step, Create the Kafka topics manually using a POST HTTP API Request, may fail when you run its command:

curl --location 'server_address:8083/connectors' \
  --header 'Content-Type: application/json' \
  --data '{
    "name": "jdbc_source_cdata_amazonathena_01",
    "config": {
      "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
      "connection.url": "jdbc:amazonathena:AWSAccessKey='a123';AWSSecretKey='s123';AWSRegion='IRELAND';Database='sampledb';S3StagingDirectory='s3://bucket/staging/';",
      "topic.prefix": "amazonathena-01-",
      "mode": "bulk"
    }
  }'

If this request returns a 500 error stating that the io.confluent.connect.jdbc.JdbcSourceConnector class is not found, the JDBC Source Connector has not been installed yet. To resolve this issue, install it with the Confluent Hub client using the following command (upon completion, restart the service):

confluent-hub install confluentinc/kafka-connect-jdbc:latest
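After the restart, you can confirm that Kafka Connect has picked up the plugin by querying its /connector-plugins REST endpoint, which lists the available connector classes. The following is a minimal sketch, assuming Connect's REST API listens on localhost:8083 as elsewhere in this article; the function name has_jdbc_source is hypothetical:

```shell
#!/usr/bin/env bash
# has_jdbc_source: reads the JSON returned by GET /connector-plugins on stdin
# and succeeds when the JDBC source connector class is listed.
has_jdbc_source() {
  grep -q '"class"[[:space:]]*:[[:space:]]*"io\.confluent\.connect\.jdbc\.JdbcSourceConnector"'
}

# Usage (not executed here, since it needs a running Connect worker):
#   curl -s localhost:8083/connector-plugins | has_jdbc_source \
#     && echo "JDBC Source Connector is available"
```

If the class is still missing after the restart, the plugin was likely installed into a directory that is not on the worker's plugin.path.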

-

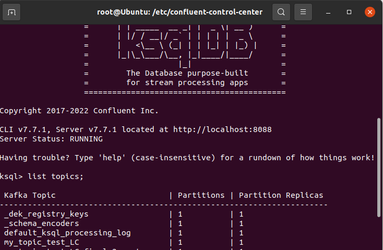

Topic not listed in the ksql command list topics;

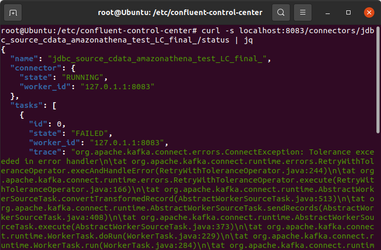

If the connector has been added successfully (which you can verify by opening localhost:8083/connectors in a browser) but its topic does not appear when you list your topics, something is likely wrong with the POST HTTP API request.

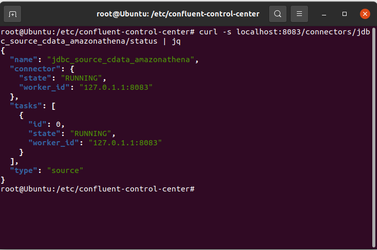

To check that, simply use the following command:

curl -s localhost:8083/connectors/<connector_name>/status | jq

For example:

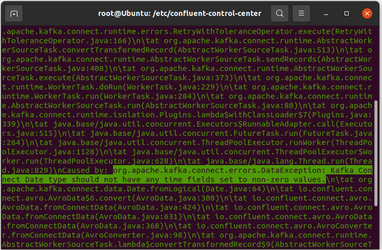

In this example, the status shows that the table the connector is trying to fetch data from is throwing a data exception.
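When jq is not available, the connector and task states can still be pulled out of the status response with standard tools. This is a rough sketch that assumes the usual shape of the Kafka Connect status response, in which the connector object and each task carry a "state" field; the function name connector_states is hypothetical:

```shell
#!/usr/bin/env bash
# connector_states: reads a /connectors/<connector_name>/status response on
# stdin and prints every "state" value it contains, one per line, in the
# order they appear in the JSON.
connector_states() {
  grep -Eo '"state"[[:space:]]*:[[:space:]]*"[A-Z_]+"' \
    | sed -E 's/.*"([A-Z_]+)"$/\1/'
}

# Usage (not executed here, since it needs a running Connect worker):
#   curl -s localhost:8083/connectors/<connector_name>/status | connector_states
```

A FAILED entry in the output means the connector or one of its tasks has stopped. With jq installed, a filter such as jq '.tasks[] | {id, state, trace}' shows the stack trace that explains why.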

After changing the request to select another table (in this case, customers) by adding "table.whitelist": "customers" to it, everything runs as expected.

Additionally, the topic has now been added to the service.
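For reference, the adjusted request body can be generated as follows. This is a sketch only: it reuses the placeholder connection values from the request shown earlier, and the function name connector_payload is hypothetical:

```shell
#!/usr/bin/env bash
# connector_payload TABLE
# Prints the connector definition used earlier in this article, with
# "table.whitelist" set to TABLE. All connection values are placeholders.
connector_payload() {
  local table="$1"
  cat <<EOF
{
  "name": "jdbc_source_cdata_amazonathena_01",
  "config": {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "connection.url": "jdbc:amazonathena:AWSAccessKey='a123';AWSSecretKey='s123';AWSRegion='IRELAND';Database='sampledb';S3StagingDirectory='s3://bucket/staging/';",
    "topic.prefix": "amazonathena-01-",
    "mode": "bulk",
    "table.whitelist": "$table"
  }
}
EOF
}

# Usage (not executed here, since it needs a running Connect worker):
#   connector_payload customers | curl --location 'server_address:8083/connectors' \
#     --header 'Content-Type: application/json' --data @-
```

If a connector with the same name already exists, the POST is expected to be rejected, so remove the old connector first (for example with a DELETE request to /connectors/<connector_name>) or choose a new name.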

Please reach out to our support team if this does not resolve the problem for you.